In the 1990s, Don Norman, along with his colleagues Andrew Ortony and William Revelle, began to explore the role that emotions play in making products easier, more pleasurable, and more effective to use. During this period, various design researchers were investigating the link between emotions (especially responses to aesthetics) and usability. These inquiries confirmed that our affective states strongly influence our experiences, and that these states can be induced by the design of product systems.

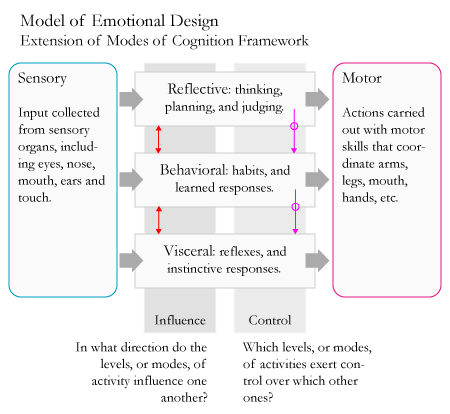

The model they developed aims to explain how different levels of our brain govern our emotions and behaviors. At the visceral level our brain is pre-wired to rapidly respond to events in the physical world by triggering physiological responses in our body. The behavioral level controls our everyday behaviors, including learned routines such as walking and talking. Lastly, the reflective level is responsible for cognitive processes related to contemplation and planning.

Emotions can arise at various levels and are created by a combination of physiological and behavioral responses that are influenced by reflective cognitive processes. An emotion like anger tends to be mostly visceral or behavioral in nature. However, indignation, which is a higher-level version of this emotion, is reflective in nature as well.

The main implication of this model is that our affective states have an impact on how we think. This insight applies both to the user’s affective state when using the product and to how that state will be affected by use of the product. With regard to the former, designers can leverage an understanding of common physiological and emotional responses to stressful situations in order to design products that can be used successfully in such contexts.

A user’s experience with a product can itself have an impact on their affective state. High- and low-level emotions can influence all levels of cognitive activity, which is why our visceral response to a product’s aesthetics can affect our behavior. On the other hand, our higher cognitive functions control our lower-level functions, which is why we can overcome our initial emotional responses if a product is effective enough.

The most common way that designers apply this model is by exploring the design considerations associated with each of the three levels. Visceral design encompasses considerations such as the aesthetics of the look, feel, smell, and sound of the product. Behavioral design refers to considerations associated with the product’s usability. Reflective design is concerned with the meaning and value that a product provides within the context of a specific culture.

I consider this model to be an evolution of the modes of cognition framework. The main change is that in the Emotional Model the “experiential” mode of cognition has been divided into two distinct types of cognition: visceral and behavioral. This revision enables the model to reflect the important role played by our emotional response to a product’s aesthetics.

[source: Interaction Design: Beyond Human-Computer Interaction; Don Norman’s book Emotional Design.]

** What the hell is ID FMP? **

Showing posts with label interaction. Show all posts

Sunday, April 12, 2009

Sunday, March 29, 2009

ID FMP: External Cognitive Activities

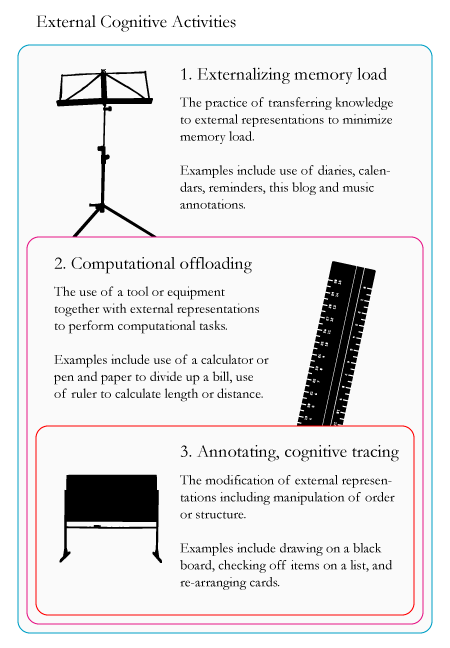

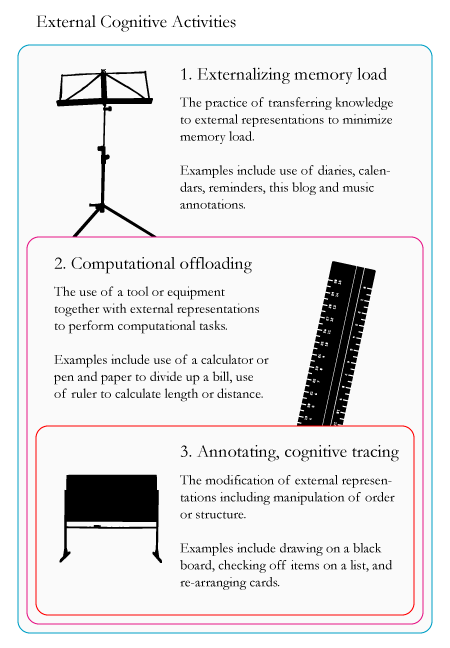

People often leverage artifacts and characteristics of their environment to reduce cognitive load and enhance their cognitive capabilities. External cognition refers to the activities that people use to support their cognitive efforts. These activities rely on a wide range of artifacts, such as computers, watches, pens, and paper; characteristics of the environment, such as visible landmarks and signs; and other people. There are three main types of external cognitive activities: externalization of memory load, computational offloading, and annotation and cognitive tracing.

These three types of activities are heavily interdependent. In the diagram above they are listed from broadest to most specific. The externalization of memory load is the most basic external cognitive activity, and it is involved in all types of external cognitive activities.

Computational offloading leverages memory externalization for the specific purpose of performing computational tasks. It is the next most basic external cognitive activity.

Annotation and cognitive tracing can be used to support both of the external cognitive activities mentioned above. This type of activity involves manipulating or modifying memory and computational externalizations in ways that change the meaning of the externalizations themselves.

External cognitive activities are used to support experiential and reflective modes of cognition [more info on cognitive modes]. These activities rely on and support all types of cognitive processes defined in my earlier post: attention, perception, memory, language, learning, and higher reasoning [more info on cognitive process types].

This framework of external cognitive activities complements the Information Processing model by identifying how people leverage their external environment to enhance and support their cognitive capabilities [more info on information processing model].

It also complements the model of interaction by providing additional insights regarding how people interact with the world (or system images) to support and enhance their cognitive capabilities. However, it does not provide insight into how people interact with systems for non-cognitive pursuits, such as physical activity and communication [more info on model of interaction].

[source: Interaction Design: Beyond Human-Computer Interaction]

Labels: cognition, communication, don norman, experiential, external, ID FMP, interaction, interaction design, models

Friday, March 27, 2009

ID FMP: Information Processing Cognitive Model

One of the most prevalent metaphors used in cognitive psychology compares the mind to an information processor. According to this perspective, information enters the mind and is processed through four linear stages that enable users to choose an appropriate response.

Though this model offers insights into how people process information, it is limited by its exclusive focus on activities that happen in the mind. Most of our cognitive activities involve interactions with people, objects, and other aspects of the environment around us. In other words, cognition does not take place only in the mind.

In my next ID FMP post I will cover the external cognition framework, which describes external cognitive activities, and distributed cognition models, which attempt to map all internal and external activities. Here’s how this model aligns with the frameworks, models, and principles that I have explored over the past several weeks.

The cognitive activities modeled by the Information Processing framework above can be mapped to the mental activities outlined in Norman’s Model of Interaction. At a high level, Norman’s model provides additional insights regarding the mental activities that take place, and it features the external environment as an important, though unexplored, element. Here is a brief overview of how the phases of this model relate to those of the interaction model:

- “Input encoding” maps to “perception”

- “Comparison” encompasses “interpretation” and “evaluation”

- “Response selection” corresponds to “intention” and “action specification”

- “Response execution” maps to “execution”
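As a minimal sketch, the mapping in the list above can be written as a small lookup table. The names `STAGE_TO_NORMAN_PHASES`, `NORMAN_PHASES`, `norman_phases_for`, and `unmapped_phases` are hypothetical and chosen only for illustration; the seven phase labels are shortened forms of the phases in Norman’s Model of Interaction.

```python
# Hypothetical lookup table: Information Processing stage -> Norman phases.
# Stage and phase labels follow the list above; the names are illustrative.
STAGE_TO_NORMAN_PHASES = {
    "input encoding": ["perception"],
    "comparison": ["interpretation", "evaluation"],
    "response selection": ["intention", "action specification"],
    "response execution": ["execution"],
}

# Shortened labels for the seven phases of Norman's Model of Interaction.
NORMAN_PHASES = [
    "goal",
    "intention",
    "action specification",
    "execution",
    "perception",
    "interpretation",
    "evaluation",
]


def norman_phases_for(stage: str) -> list[str]:
    """Return the Norman phases that a given Information Processing stage maps to."""
    return STAGE_TO_NORMAN_PHASES[stage.lower()]


def unmapped_phases() -> list[str]:
    """Return the Norman phases that no Information Processing stage covers."""
    covered = {
        phase
        for phases in STAGE_TO_NORMAN_PHASES.values()
        for phase in phases
    }
    return [phase for phase in NORMAN_PHASES if phase not in covered]
```

Under these assumptions, `unmapped_phases()` returns `["goal"]`: in the list above, goal formation has no counterpart among the four Information Processing stages.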

Here is how this model aligns with the framework regarding the relationship between a designer’s conceptual model and a user’s mental model. The focus of the Information Processing model is on the cognitive processes that occur in the user’s mind when they are interacting with the world. These processes are closely related to mental models in two ways:

- First, mental models provide the foundation for people to understand their interactions with the world and select appropriate responses.

- Second, mental models evolve as people evaluate the impact of their own actions and other events on the world.

The Conversation Turn Taking Model is related to the Information Processing model in a broad sense only. The turn taking framework focuses on explaining an external phenomenon related to language and communication that is driven by the cognitive functions described in the Information Processing model. They do not contradict one another, nor do they directly support each other.

The Information Processing model can be applied to both reflective and experiential modes of cognition, though the phases involved in each mode differ. Reflective cognition tends to be active during the “comparison” and “response selection” phases. On the other hand, experiential cognition can be active across all phases depending on the type of interaction.

The chart below provides an overview regarding which cognitive process types are involved with each phase of the Information Processing model.

[source: Interaction Design: Beyond Human-Computer Interaction]

Labels: cognition, communication, don norman, experiential, ID FMP, interaction, interaction design, models, reflective

Sunday, March 22, 2009

ID FMP: Model of Interaction

There are many theories that attempt to describe the cognitive processes that govern users’ interactions with product systems. Here I will focus on a model developed by Don Norman, which he outlined in his book The Design of Everyday Things. This framework breaks down the process of interaction between a human and a product into seven distinct phases.

Seven Phases of Interaction with a Product System

- Forming the goal

- Forming the intention

- Specifying an action

- Executing the action

- Perceiving the state of the world

- Interpreting the state of the world

- Evaluating the outcome

Now let’s put this theory into context with some of the concepts and models that we’ve encountered thus far. First, I want to point out that this model aligns with Don Norman’s model regarding the relationship between a designer’s conceptual model and a user’s mental model [read more here]. The focus of this framework is the interaction between the system image (the product’s interface, where user interaction happens) and the user’s mental model (the user’s understanding of how the product works, which governs the user’s interpretations, evaluations, goals, intentions, and action specifications).

I’ve extended Norman’s original model to account for the reflective cognition that is also involved in people’s interactions with products. Reflective cognition governs people’s higher-level evaluations, goals, and intentions, which ultimately drive their experiential cognition activities. Experiential cognition governs the second-by-second evaluations, goals, and intentions involved in people’s interactions with products. These two different modes of cognition are explored in greater detail here.

Here is an example to distinguish these two types of cognition and interaction and to highlight the interdependencies between them. Let’s consider a person’s interaction with a car. In this scenario, a person’s reflective cognition would include setting a goal, such as choosing a destination and desired time of arrival, and evaluating what route to take based on an understanding of the current location and traffic patterns. These activities would govern a person’s experiential interactions with the car and drive their moment-by-moment evaluations and creation of goals and intentions. Experiential interactions would include using the steering wheel to turn a corner or switch lanes, pressing the accelerator to speed up, and stepping on the brakes to stop the car.

How does the concept of mental models relate to this framework? The mental model itself is not represented by a single phase, or grouping of phases. It refers to the understanding that a user has of how a system works. Norman’s model was developed to describe how users interact with product systems on an experiential, minute-by-minute basis. At this level of interaction a user’s mental model drives their interpretations, evaluations, setting of goals and intentions, and specification of actions.

Now let’s explore how the different cognitive types come into play during the various phases of interaction. These cognitive types have been outlined in greater detail here.

- Attention supports all phases of interaction from perception through to action execution. This cognitive process refers to a user’s ability to focus on both external phenomena and internal thoughts.

- Perception is clearly called out as its own phase in Don Norman’s model.

- Memory plays an important role during all phases from interpretation through to action specification.

- Language supports communication throughout all phases of a person’s interaction with a product. Here I refer to both verbal and visual languages.

- Learning enables people to use new products and to increase effectiveness and efficiency in their interactions with existing products. This cognitive process supports all phases from interpretation through to action specification.

- Higher reasoning governs all activities related to setting high-level goals and intentions and to driving evaluations.
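The ranges in the list above (“from perception through to action execution”, “from interpretation through to action specification”) read most naturally if the seven phases are treated as a loop in which evaluating the outcome feeds the next goal. That looping is an assumption of this sketch rather than something the list states. The names `PHASES`, `phase_span`, and `SUPPORT` are hypothetical, and only the processes described with an explicit range are included.

```python
# The seven phases, in the order given earlier in this post.
PHASES = [
    "forming the goal",
    "forming the intention",
    "specifying an action",
    "executing the action",
    "perceiving the state of the world",
    "interpreting the state of the world",
    "evaluating the outcome",
]


def phase_span(start: str, end: str) -> list[str]:
    """Return the phases from start through end (inclusive).

    Assumption: the phases form a cycle, so a span that starts late in the
    list wraps around to the beginning.
    """
    i = PHASES.index(start)
    j = PHASES.index(end)
    count = (j - i) % len(PHASES) + 1
    return [PHASES[(i + k) % len(PHASES)] for k in range(count)]


# Cognitive processes whose supported phases are described as a range above.
SUPPORT = {
    "attention": phase_span(
        "perceiving the state of the world", "executing the action"
    ),
    "memory": phase_span(
        "interpreting the state of the world", "specifying an action"
    ),
    "learning": phase_span(
        "interpreting the state of the world", "specifying an action"
    ),
}
```

Read this way, attention spans all seven phases, while memory and learning span the five phases from interpretation through action specification and skip execution and perception.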

Labels: cognition, communication, don norman, experiential, ID FMP, interaction, interaction design, models, reflective

Friday, February 13, 2009

Wearable Projection-Based Interface

Earlier today I came across a post on Wired about a new mobile device prototype that was unveiled at TED. Designed by MIT Media Lab students, this device uses a projector to display the interface, which is manipulated using gestures captured by a video camera. Video demos from Wired are featured below.

In many ways this prototype is similar to the Holographic Projection Interface that I wrote about back in October. The main differences are that this design uses conventional projection technology rather than holographic projection, which is not yet available, and that it leverages internet connectivity to provide contextually relevant information above and beyond GPS navigation. They have also managed to create an actual prototype using $350 worth of equipment. Though they are far from creating a commercially viable product, it is a pretty impressive prototype.

[via Wired]
