This assignment was taken from the third chapter of the book Interaction Design: Beyond Human-Computer Interaction, written by Helen Sharp, Jenny Preece, and Yvonne Rogers.

Assignment Questions

Question A: First elicit your own mental model. Write down how you think a cash machine (ATM) works. Then answer the questions below. Next, ask two people the same questions.

- How much money are you allowed to take out?

- If you took this out and then went to another machine and tried to withdraw the same amount, what would happen?

- What is on your card?

- How is the information used?

- What happens if you enter the wrong number?

- Why are there pauses between the steps of a transaction?

- How long are they?

- What happens if you type ahead during the pauses?

- What happens to the card in the machine?

- Why does it stay inside the machine?

- Do you count the money? Why?

Question B: Now analyze your answers. Do you get the same or different explanations? What do the findings indicate? How accurate are people’s mental models of the way ATMs work? How transparent are the ATM systems they are talking about?

Question C: Next, try to interpret your findings with respect to the design of the system. Are any interface features revealed as being particularly problematic? What design recommendations do these suggest?

Question D: Finally, how might you design a better conceptual model that would allow users to develop a better mental model of ATMs (assuming this is a desirable goal)?

Assignment Answers

Question A

Here’s My Take

Here is my understanding of how an ATM functions. The user owns a card with a magnetic stripe that holds his/her account number. To execute a transaction, the user first inserts the card into the appropriate slot so that the machine can read the card number. Next, the user is prompted to enter a four-digit PIN to access the account.

Once the PIN is entered, the ATM connects to a central server via the internet and authenticates the user. If authentication succeeds, the ATM remains connected to the server so the user can access services such as viewing the account balance, withdrawing or depositing funds, and possibly transferring money between accounts. Whenever the user performs an action on the account, the ATM communicates with the server to execute the command.

For security purposes, the ATM asks the user to re-enter the PIN every time s/he requests a new action, e.g. withdrawing money. Other security features include asking the user whether s/he is ready to quit after every transaction and automatically logging the user off after a short period of inactivity.
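To make the flow above concrete, here is a minimal sketch of that mental model as a small session object. It is a hypothetical illustration, not how any real ATM or bank network is implemented: the class `AtmSession`, its methods, and the 30-second `INACTIVITY_TIMEOUT_S` are assumptions, and the local PIN comparison stands in for the central server's authentication check.

```python
from dataclasses import dataclass, field

# Hypothetical timeout for illustration only; real machines use their own values.
INACTIVITY_TIMEOUT_S = 30.0


@dataclass
class AtmSession:
    """Sketch of the session flow in the author's mental model of an ATM."""
    card_number: str          # read from the card's magnetic stripe
    correct_pin: str          # stand-in for the server-side PIN check (assumption)
    authenticated: bool = False
    last_activity: float = 0.0
    log: list = field(default_factory=list)

    def _timed_out(self, now: float) -> bool:
        # Automatic log-off after a short period of inactivity.
        return now - self.last_activity > INACTIVITY_TIMEOUT_S

    def enter_pin(self, pin: str, now: float) -> bool:
        # In the mental model, the ATM forwards this to a central server.
        self.authenticated = pin == self.correct_pin
        self.last_activity = now
        return self.authenticated

    def perform(self, action: str, pin: str, now: float) -> str:
        # An unauthenticated or idle session is ended before anything else.
        if not self.authenticated or self._timed_out(now):
            self.authenticated = False
            return "logged off"
        # The PIN is re-entered for every new action, as described above.
        if pin != self.correct_pin:
            return "PIN rejected"
        self.last_activity = now
        self.log.append(action)  # the server would execute the command here
        return f"{action}: done"
```

Passing the current time in as `now` keeps the sketch deterministic and easy to reason about; the "are you ready to quit?" prompt is left out for brevity.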

Take from Subject One

The card is entered and the account is confirmed after PIN entry. The amount entered is calculated in terms of a number of bills, usually of $20 denomination, and spit out at you, and the appropriate debits are made on the account. You are then told to have a nice day. Meanwhile, hardly noticed by you, your bank, the bank that owns the ATM, and perhaps the operator of the ATM have embezzled “so-called” fees from your account.

Take from Subject Two

An ATM works like a computer. Your ATM card is like an activation key that is only usable with the right password. If you don't provide the right password, the machine will eat it. The ATM uses software programmed by the bank (so I guess every bank's ATM is slightly different for that reason), and depending on which button you choose next, it does various things. So I guess you can think of the ATM like a road to search for treasure... Your cash is the ultimate treasure, and what you do from the moment you stand in front of the ATM until you get the actual cash is your path in search of the treasure. The ATM is also networked, so someone is always watching your every move.


Question B

For the most part, everyone has a fairly accurate mental model of how an ATM works. All of us understand that the services provided by ATMs are accessed using a card with a corresponding PIN. Another shared understanding is that ATM services are enabled by connections to bank databases, where transactions are authorized and recorded.

The biggest difference between the explanations was each author's focus. I focused on providing a technical/systems description of how an ATM works; subject one’s description covered user experience elements such as frustration with excessive bank fees; subject two provided an overview from a much looser, metaphorical perspective. Otherwise, there were small differences in each person’s understanding of specific elements of the user experience (e.g. the amount of money that can be taken out, reasons for delays, the response to incorrect input, etc.).

These findings indicate that most people in my social circle have accurate mental models of the way ATMs work. This suggests that the way ATM systems work is, for the most part, transparent. However, there are certain elements of the interaction that users still find unclear or dislike. These include: the amount of money that can be taken out; the total value of the fees being applied to the account; and the inability to count the money when the ATM is in a public place.

Question C

For the most part, users have a good understanding of how ATM systems work. Therefore, the opportunities to address user issues are mostly small and incremental in nature (e.g. closing the small information gaps). This is not to say that new technologies, concepts, and approaches could not improve the experience of using an ATM in ways that current users cannot envision.

Here are a few design recommendations to address the three gaps identified between the system image and users’ mental models:

- Lack of clarity regarding the amount of money that can be taken out. A possible solution: provide users with information about their daily withdrawal limit (as well as any ATM-specific limits). This issue is only present when using ATMs that do not belong to the issuing bank.

- Lack of clarity regarding the total value of the fees being applied to the account. A possible solution: provide users with information about the ATM and bank fees applied to each transaction. This issue is only present when using ATMs that do not belong to the issuing bank.

- The inability to count the money when the ATM is in a public place. A possible solution: create cash dispensers that use the arrangement of the bills and a time delay to let users count the money in the tray while it is being dispensed.
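The first recommendation could be sketched as a small calculation the ATM runs before asking the user for an amount, so the screen can state the most that can be taken out right now. This is a hypothetical illustration: the function `max_withdrawal`, its parameters, and the $20 default bill size (taken from Subject One's description) are assumptions, and real banks and ATM operators apply their own rules.

```python
def max_withdrawal(balance: int, daily_limit: int, withdrawn_today: int,
                   atm_limit: int, bill: int = 20) -> int:
    """Hypothetical: largest whole-dollar amount the user could withdraw now."""
    # What is left of the bank's daily limit (never negative).
    remaining_daily = max(daily_limit - withdrawn_today, 0)
    # The tightest of the account balance, the daily limit, and the ATM's own limit.
    cap = max(min(balance, remaining_daily, atm_limit), 0)
    # The machine can only dispense whole bills, so round down to a multiple of `bill`.
    return (cap // bill) * bill


def limit_message(amount: int) -> str:
    # Hypothetical on-screen text that closes the information gap.
    return f"You can withdraw up to ${amount} at this machine right now."
```

For example, with a $500 daily limit, $130 already withdrawn today, and a $400 ATM limit, the user would be told they can take out up to $360.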

Question D

Many advances have taken place in the design of ATM systems over the past several years. The new ATM from Chase Bank in New York is a great example of a well-designed ATM system. It has several notable improvements over older systems, including easy envelope-free deposits and improved touch-screen interfaces.

Here are a few areas related to the conceptual model of ATM systems that offer opportunities for improvements:

Access to services provided by the ATM

Using presence awareness technology, similar to that available on luxury car models, banks could design ATMs that identify the user without the need for a card. Users would carry a key (rather than a card) containing an RFID chip or similar technology. When a user approaches the machine, s/he would then be prompted to enter a PIN without having to insert a card.

Rather than focusing only on improving the experience of using ATMs, it is also valuable to explore how to provide the same services through different channels. Cell phones offer a lot of promise in this area. Many people already prefer to use their cell phones to check their account balance on the go, and money transfers and payments by cell phone are becoming more widely available around the world.

Despite the increased use of electronic forms of payment, there are still many types of transactions for which people need cold hard cash. For cash withdrawals and deposits, there is no alternative to a physical device such as an ATM (other than the cash-back services offered by select stores that accept debit cards). For these transactions, the cell phone could enhance the existing experience. Perhaps, using Bluetooth, it could serve as the key that enables the presence awareness described above. It could also give the user a confirmation or electronic receipt of the transaction, including all relevant fees.

Security of services provided by the ATM

New technologies can be leveraged to improve the security of ATM systems. Fingerprint or other biometric authentication methods could replace the PIN, which would not only increase security but also reduce the cognitive load of memorizing the PIN (or rather, all of your PINs and passwords). Of course, this would mean that you could no longer take out money using your significant other’s ATM card.

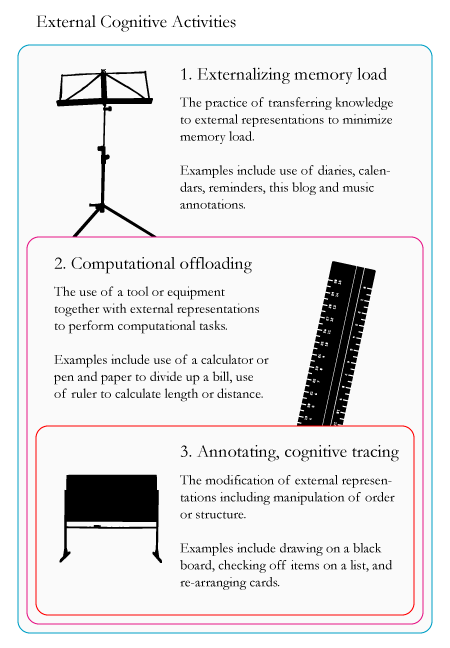

These three types of activities are heavily interdependent. In the diagram above, they are listed from broadest to most specific. The externalization of memory load is the most basic external cognitive activity and is involved in all other types of external cognitive activity.
